3 Ways to Effectively Demystify the AI Black Box

Better understanding of the technology can lead to more transparency in how the system operates, which leaves less room for questioning AI processes.

Artificial intelligence has demonstrated immense promise when applying machine learning to support the overall processing of large datasets, particularly in the banking and financial services industry. Sixty percent of financial services companies have implemented at least one form of AI, ranging from virtual assistants communicating with customers and the automation of workflows to managing fraud and network security.

Despite these advancements in efficiency and automation, the complexity of AI models’ inner workings often creates a “black box” problem. This largely stems from a lack of understanding of how the system works and from ongoing concerns about opacity, unfair discrimination, ethics, and dangers to privacy and autonomy. In fact, the lack of transparency in system operation is frequently linked to hidden biases.

While often unintentional, many types of bias can arise in AI, including algorithm, sample, prejudice, measurement and exclusion bias. These biases can lead to increased consumer friction, poor customer service, lower sales and revenue, unfair or illegal behavior, and potential discrimination.

AI credit scoring is a key example of how financial institutions are using digital identity or social media to determine a consumer’s spending habits or the consumer’s ability to repay debts. Ultimately, the goal is to eliminate biases in the AI algorithms to create accurate credit ratings.

So, how do we, as an industry, overcome these inherent biases?

Here are 3 ways to demystify the AI black box and navigate bias:

Establishing trustworthy AI

Understanding how an AI system operates gives users the confidence to recognize and navigate its biases. Financial institutions can build this understanding by establishing trustworthy AI, the notion that AI will realize its full potential only once trust has been developed at every level of the process. Trustworthy AI requires several components, including privacy, robustness and explainability.

Achieving trustworthy AI requires close examination of the system and the ability to identify which factors contribute to each bias, so that informed decisions can be made about what to do once a bias is found. Trustworthy AI is a multi-layered area that demands greater awareness. Organizations must therefore promote awareness of AI ethics among those who examine and work with AI, and make sure they understand the risks, the potential impact of AI and the ways to mitigate those risks.
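As one illustration of what "identifying a bias" can look like in practice, the sketch below compares approval rates across two groups of applicants. It is a minimal example under stated assumptions, not a complete fairness audit: the group labels, the decisions and the 0.8 review threshold are all made up for illustration, and the ratio of approval rates is only one of many possible bias measures.

```python
from collections import defaultdict


def approval_rates(decisions):
    """Map each group to its approval rate, given (group, approved) pairs."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        approved[group] += int(ok)
    return {group: approved[group] / totals[group] for group in totals}


def approval_rate_ratio(rates):
    """Lowest group approval rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was approved in any group, so the rates are equal
    return min(rates.values()) / highest


# Hypothetical credit decisions: group A has 8 of 10 approved, group B 5 of 10.
decisions = (
    [("A", True)] * 8 + [("A", False)] * 2
    + [("B", True)] * 5 + [("B", False)] * 5
)

rates = approval_rates(decisions)
ratio = approval_rate_ratio(rates)

# Assumed review threshold; a real policy would set its own value.
REVIEW_THRESHOLD = 0.8
needs_review = ratio < REVIEW_THRESHOLD

print(rates, round(ratio, 3), needs_review)
```

A low ratio does not prove discrimination on its own, but it flags where a closer look at the contributing factors is warranted.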

Understanding explainable AI

Explainable AI (XAI) helps reduce the inherent biases that exist in AI-based risk assessment. With it, stakeholders involved in the lifecycle of an AI system can determine why the AI made a certain decision and where it might have decided differently. For example, when examining an alert for a transaction or login raised by AI-based risk assessment and fraud detection, we need to be able to easily understand and interpret why the event was flagged as fraud.

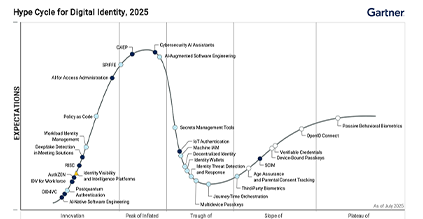
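To make the fraud-alert example concrete, here is a minimal sketch of one simple form of explanation: breaking a linear risk score down into per-feature contributions. The feature names, weights and bias term are hypothetical and chosen only for illustration; production fraud models are usually more complex and rely on dedicated explanation techniques.

```python
import math

# Hypothetical weights for a toy logistic risk model (illustrative only).
WEIGHTS = {
    "new_device": 1.8,
    "foreign_ip": 1.2,
    "amount_zscore": 0.9,
    "night_time": 0.4,
}
BIAS = -3.0


def risk_score(features):
    """Return the fraud probability from a logistic model over the features."""
    logit = BIAS + sum(WEIGHTS[name] * value for name, value in features.items())
    return 1.0 / (1.0 + math.exp(-logit))


def explain(features):
    """Return (feature, contribution to the logit) pairs, largest magnitude first."""
    contributions = {name: WEIGHTS[name] * value for name, value in features.items()}
    return sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)


# A made-up login event: new device, foreign IP, unusually large amount, daytime.
event = {
    "new_device": 1.0,
    "foreign_ip": 1.0,
    "amount_zscore": 2.5,
    "night_time": 0.0,
}

score = risk_score(event)
reasons = explain(event)

print(f"risk score: {score:.2f}")
for name, contribution in reasons:
    print(f"{name}: {contribution:+.2f}")
```

Because the model is linear in its inputs, each contribution is simply the weight multiplied by the feature value, so an analyst reviewing the alert can see which signals pushed the score up the most.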

As explainable AI systems are implemented, it is critical that information officers assess what level of comprehension each stakeholder needs. There is a strong likelihood that they will be required to make their AI platforms explainable to their engineers, legal team, compliance officers and auditors. In fact, Gartner predicts that, by 2025, 30% of government and big company contracts for AI products and services will mandate the use of explainable and ethical AI.

Creating clear regulations

To regulate AI effectively and standardize how its potential risks are managed, we need the involvement of critical stakeholders across government, industry and academia. Governments are releasing new AI regulation initiatives, such as the European Commission’s guidelines for trustworthy AI released earlier this year. These guidelines outline how to make AI systems fair, safe, transparent, and beneficial to users.

In addition, the National Institute of Standards and Technology in the United States is developing standards and tools to verify that AI is reliable. Through these new efforts for standardization, AI technology can be better understood and managed to help regulate biases unintentionally created in AI systems. This results in more transparency in how the system operates, leaving less room for questioning AI processes.

The financial services industry has continually made strides to demystify the AI black box. By building awareness of ethical AI, understanding explainable AI, and instituting clear regulations and guidelines to ensure systems are fair, the industry can overcome these inherent biases and move closer to trustworthy AI.

This blog, written by Ismini Psychoula, research scientist at OneSpan, was first published on BAI.org on March 11, 2022.